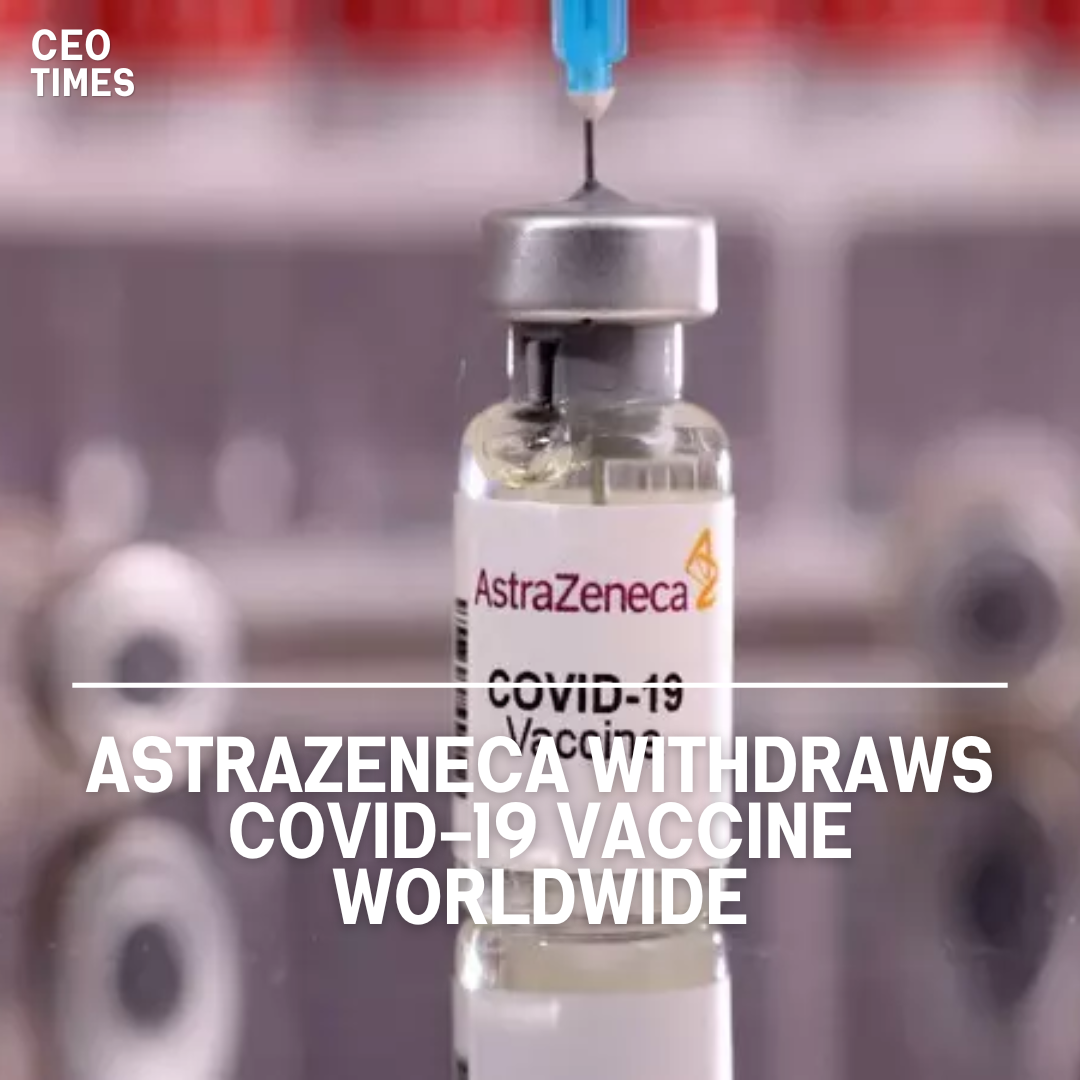

OpenAI, backed by Microsoft, has launched a new tool designed to detect images created by its text-to-image generator, DALL-E 3.

The move comes amid growing concern that AI-generated content could be used to spread misinformation and manipulate voters in elections around the world.

Detection Accuracy and Capabilities:

According to OpenAI, the tool was about 98% accurate in internal testing. It can still identify images generated by DALL-E 3 after common modifications such as compression, cropping, and saturation changes, which have minimal impact on its performance.

Tamper-Resistant Watermarking:

In addition to image detection, OpenAI plans to add tamper-resistant watermarks to digital content such as photos and audio. The watermarks are meant to act as a signal that is difficult to remove, making it easier to verify the authenticity of media content and trace its origin.

Collaboration and Industry Standards:

OpenAI has also joined an industry group, whose members include major tech companies such as Google, Microsoft, and Adobe, that aims to establish standards for tracing the origin of different media types.

This collaborative effort underscores the importance of addressing the challenges posed by AI-generated content in maintaining the integrity of digital information.

Election Concerns and Misinformation:

The report comes amid worries over the spread of AI-generated content and deepfakes during elections in countries including India, the U.S., Pakistan, and Indonesia.

Fake videos circulating online, particularly those targeting political figures, highlight the urgent need for effective detection and mitigation measures.

Societal Resilience Fund:

In collaboration with Microsoft, OpenAI is launching a $2 million “societal resilience” fund to support AI education initiatives.

The fund is intended to improve public awareness and understanding of AI technologies, helping people navigate the changing digital media landscape responsibly.