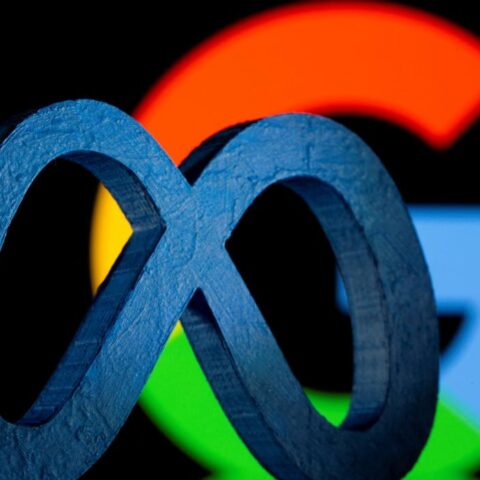

Meta, Instagram’s parent company, announced on Thursday that it plans to test new features aimed at protecting teenagers from harmful content and potential scammers.

Amid mounting concerns over social media’s impact on mental health, Meta is implementing measures to address these issues and enhance safety for young users.

Protection Feature for Direct Messages:

Instagram will introduce a protection feature for direct messages that uses on-device machine learning to analyze images sent in direct messages for nudity. The feature, which will be enabled by default for users under 18, aims to keep teenagers from encountering inappropriate content and the risks that can come with it.

Because the analysis runs on the device itself, the nudity protection feature will work even in end-to-end encrypted chats, preserving privacy while maintaining safety.

Meta emphasizes that it will not have access to these images unless users report them, highlighting its commitment to user privacy and security.

Combatting Sextortion Scams:

In addition to nudity detection, Meta is developing technology to identify accounts that may be engaged in sextortion scams, in which victims are blackmailed with intimate images.

The company is also testing pop-up messages to educate users who may have interacted with such accounts, aiming to mitigate the risks associated with online exploitation.

Continued Efforts to Protect Teens:

Meta’s safety initiatives are not limited to direct messages: the company also plans to hide sensitive content from teens on both Facebook and Instagram.

These efforts align with the firm’s commitment to addressing concerns raised by regulators and advocacy groups regarding the impact of social media on young users’ well-being.

Legal and Regulatory Scrutiny:

Meta faces legal challenges, including lawsuits from U.S. states alleging that the company misled the public about the risks of its platforms.

In Europe, regulatory scrutiny focuses on Meta’s measures to protect children from harmful content, reflecting broader concerns about online safety and accountability.